TFLite support via TOSA

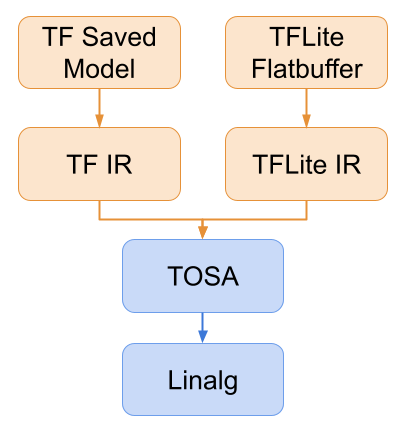

IREE can now execute TensorFlow Lite (TFLite) models through TOSA, an open standard of common tensor operations that is part of MLIR core. TOSA’s high-level representation of tensor operations provides a common frontend for ingesting models from different frameworks. In this case, we ingest a TFLite FlatBuffer and compile it to TOSA IR, which IREE accepts as an input format and compiles to its various backends.

Using TFLite as a frontend for IREE provides an alternative ingestion path for existing models that could benefit from IREE’s design. Models already designed for on-device inference gain an alternative execution path without any additional porting, while benefiting from IREE’s improvements in buffer management, its work dispatch system, and its compact binary format. With continued improvements to IREE and MLIR’s compilation performance, more optimized versions of a model can be compiled and distributed to target devices without updating the client-side environment.

Today, we have validated floating-point support for a variety of models, including MobileNet (v1, v2, and v3) and MobileBERT. Work is in progress to support fully quantized models and TFLite’s hybrid quantization, along with dynamic shapes.

Examples

TFLite with IREE is available in Python and Java. We have a Colab notebook that shows how to use IREE’s Python bindings and TFLite compiler tools to compile a pre-trained TFLite model from a FlatBuffer and run it using IREE. We also have an Android Java app, forked from an existing TFLite demo app, that swaps out the TFLite library for our own AAR. More information on IREE’s TFLite frontend is available here.
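
As a rough illustration of the Python flow described above, the following sketch compiles a TFLite FlatBuffer through TOSA and then invokes the result. It is not taken from the Colab notebook. The module paths (`iree.compiler.tflite`, `iree.runtime`), the keyword arguments, and the `main` entry point name are assumptions based on IREE's Python bindings from around the time of this post, and they have changed between releases, so check them against the version you have installed. The helper names `compile_tflite` and `run_module` are hypothetical.

```python
from pathlib import Path


def compile_tflite(tflite_path, out_path, target_backends):
    """Compile a TFLite FlatBuffer to an IREE module via TOSA.

    Assumption: the IREE TFLite compiler tools are installed and expose
    `iree.compiler.tflite.compile_file` with these keyword arguments.
    """
    # Imported lazily so this file can be loaded without IREE installed.
    import iree.compiler.tflite as iree_tflite_compile

    iree_tflite_compile.compile_file(
        str(tflite_path),
        input_type="tosa",            # TFLite is imported as TOSA IR
        output_file=str(out_path),    # compiled IREE module is written here
        target_backends=list(target_backends),
        import_only=False,            # run the full compile, not just the import
    )
    return Path(out_path)


def run_module(module_path, driver, *inputs, entry="main"):
    """Load a compiled IREE module and invoke one of its functions.

    Assumption: the runtime API (`Config`, `SystemContext`,
    `VmModule.from_flatbuffer`) matches the installed release, and the
    imported model exposes its entry point under the name `entry`.
    """
    import iree.runtime as iree_rt

    config = iree_rt.Config(driver)
    context = iree_rt.SystemContext(config=config)
    vm_module = iree_rt.VmModule.from_flatbuffer(Path(module_path).read_bytes())
    context.add_vm_module(vm_module)
    return context.modules.module[entry](*inputs)
```

Both the target backend passed to `compile_tflite` and the driver passed to `run_module` are left as required parameters because their valid names depend on the IREE release and on the device being targeted.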